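Installation Process

0. Server Preparation

The servers run Ubuntu 22.04 LTS Server with the firewall disabled by default. The target is CloudStack 4.18.1.

Enable SSH and allow root login

Set the root password

sudo passwd root

Edit /etc/ssh/sshd_config, uncomment PermitRootLogin, and set it to yes

Save the file and restart ssh

sudo systemctl restart ssh

All of the configuration below is performed as the root user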
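The sshd_config edit described above could also be scripted. The following is a minimal sketch, not part of the original procedure: the function name `enable_root_login` and the throwaway demo file are assumptions made for illustration, and it relies on GNU sed as shipped with Ubuntu.

```shell
#!/bin/sh
# Sketch: enable root login in an sshd_config-style file.
# The function name and the demo file are illustrative assumptions.
enable_root_login() {
    conf="$1"
    # Keep a backup next to the file before editing it.
    cp "$conf" "$conf.bak"
    if grep -Eq '^[#[:space:]]*PermitRootLogin[[:space:]]' "$conf"; then
        # Uncomment the existing directive (if needed) and force its value to yes.
        sed -i -E 's/^[#[:space:]]*PermitRootLogin[[:space:]].*/PermitRootLogin yes/' "$conf"
    else
        # No directive present at all: append one.
        echo 'PermitRootLogin yes' >> "$conf"
    fi
}

# Demo on a throwaway file; on a real server pass /etc/ssh/sshd_config instead.
demo_conf="$(mktemp)"
printf '%s\n' 'Port 22' '#PermitRootLogin prohibit-password' > "$demo_conf"
enable_root_login "$demo_conf"
grep PermitRootLogin "$demo_conf"
```

On a real host the call would be `enable_root_login /etc/ssh/sshd_config`, followed by the ssh restart shown above.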

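Deployment layout: the management server also hosts the database and serves as both primary and secondary storage, with one additional compute node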

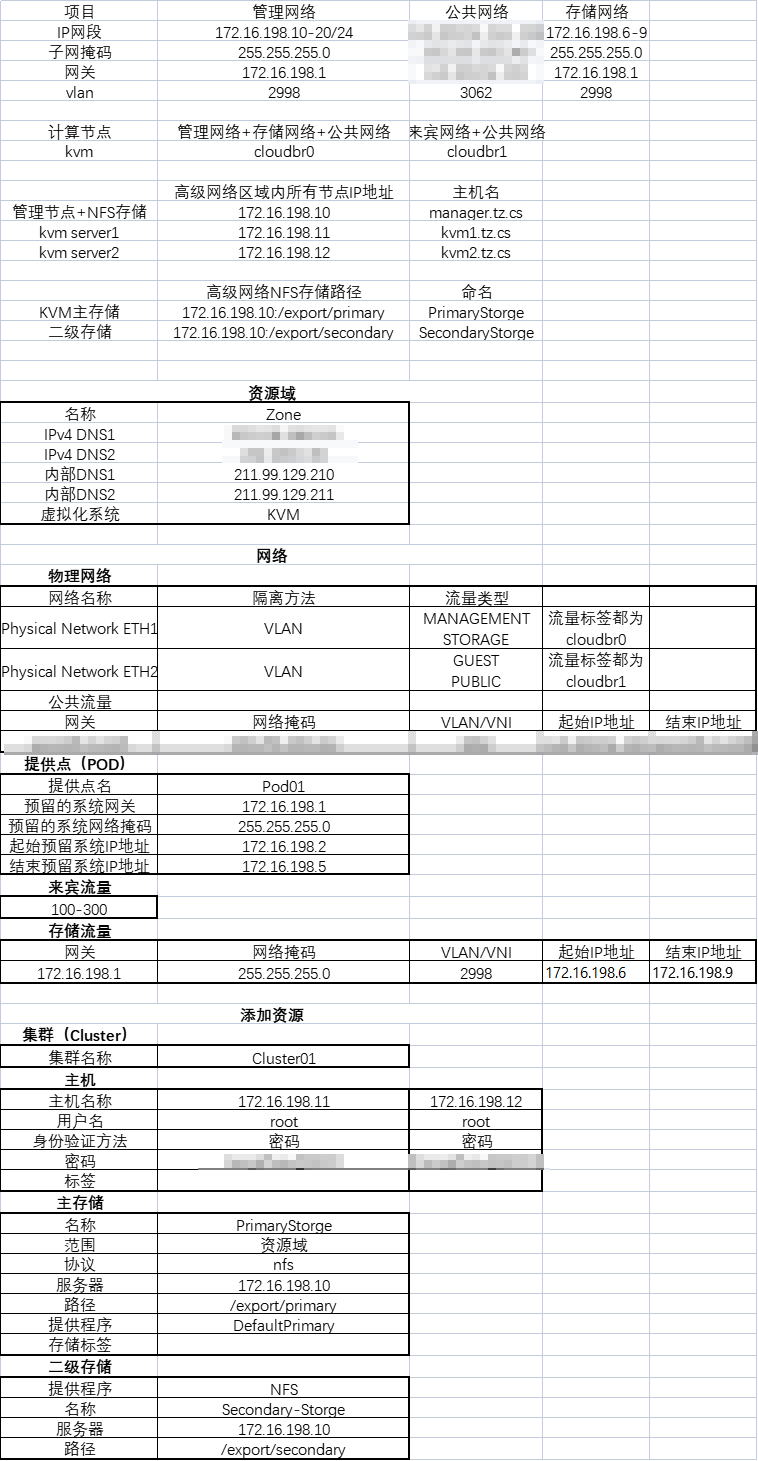

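Advanced network configuration plan

Switch every server to a mirror inside China before starting

Back up the existing list first

cp /etc/apt/sources.list /etc/apt/sources.list.backup

Edit /etc/apt/sources.list and replace its contents with one of the following: the Tsinghua mirror or the Aliyun mirror

Tsinghua mirror

# Source (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed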

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-backports main restricted universe multiverse

# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-security main restricted universe multiverse

# # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-security main restricted universe multiverse

deb http://security.ubuntu.com/ubuntu/ jammy-security main restricted universe multiverse

# deb-src http://security.ubuntu.com/ubuntu/ jammy-security main restricted universe multiverse

# Pre-release (proposed) repository; enabling it is not recommended

# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-proposed main restricted universe multiverse

# # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-proposed main restricted universe multiverse

Aliyun mirror

deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

Then run the update

apt-get update

apt-get upgrade

Configure the CloudStack deb repository

Edit /etc/apt/sources.list.d/cloudstack.list

Add

deb http://download.cloudstack.org/ubuntu jammy 4.18

Add the public key and refresh the cache

wget -O - http://download.cloudstack.org/release.asc | sudo apt-key add -

apt-get update

Install NTP

apt-get install chrony

1. Management Server Installation

Install cloudstack-management

apt-get install cloudstack-management

Downloads are very slow from inside China. You can manually download http://download.cloudstack.org/ubuntu/dists/jammy/4.18/pool/cloudstack-common_4.18.1.0_all.deb and cloudstack-management_4.18.1.0_all.deb, upload them to /var/cache/apt/archives/partial, and then run the install command, which skips the download and only verifies the files
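As an alternative to placing files in the apt cache, apt can install local .deb files directly when they are given as paths. The sketch below is a different technique from the one described above, shown only for comparison: `DEB_DIR`, `DRY_RUN`, the `-y` flag, and the function name are assumptions, and by default it only prints the command it would run.

```shell
#!/bin/sh
# Sketch: prefer locally uploaded .deb files over the slow repository download.
# DEB_DIR and the DRY_RUN switch are assumptions made for this example.
DEB_DIR="${DEB_DIR:-/root/debs}"
DRY_RUN="${DRY_RUN:-1}"

install_cmd() {
    common="$DEB_DIR/cloudstack-common_4.18.1.0_all.deb"
    mgmt="$DEB_DIR/cloudstack-management_4.18.1.0_all.deb"
    if [ -f "$common" ] && [ -f "$mgmt" ]; then
        # apt treats arguments containing a slash as local package files
        # and still resolves their dependencies from the repositories.
        echo "apt-get install -y $common $mgmt"
    else
        # Nothing uploaded: fall back to the normal repository install.
        echo "apt-get install -y cloudstack-management"
    fi
}

cmd="$(install_cmd)"
if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $cmd"
else
    $cmd
fi
```

Setting `DRY_RUN=0` runs the chosen command for real. The same pattern would apply to cloudstack-agent on the compute node.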

Install and configure MySQL

apt-get install mysql-server

Create the file

nano /etc/mysql/conf.d/cloudstack.cnf

and add the following to it

[mysqld]

server-id=1

innodb_rollback_on_timeout=1

innodb_lock_wait_timeout=600

max_connections=350

log-bin=mysql-bin

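binlog-format = 'ROW'

If MySQL runs on a different server, note that it accepts only local connections by default; add bind-address = 0.0.0.0 to allow remote connections

server-id must be purely numeric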
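The MySQL settings above can be written in one step with a here-document instead of an editor. This is a sketch under stated assumptions: `CNF_PATH` is a variable introduced here and defaults to a temporary file so that trying the example does not touch a real MySQL configuration.

```shell
#!/bin/sh
# Sketch: write the MySQL settings listed above in one step.
# CNF_PATH defaults to a temporary file so the example is safe to try;
# on the database host it would be /etc/mysql/conf.d/cloudstack.cnf.
CNF_PATH="${CNF_PATH:-$(mktemp)}"

cat > "$CNF_PATH" <<'EOF'
[mysqld]
server-id=1
innodb_rollback_on_timeout=1
innodb_lock_wait_timeout=600
max_connections=350
log-bin=mysql-bin
binlog-format = 'ROW'
EOF

# server-id must be numeric; refuse to continue otherwise.
server_id="$(sed -n 's/^server-id=//p' "$CNF_PATH")"
case "$server_id" in
    ''|*[!0-9]*) echo "server-id is not numeric: $server_id" >&2; exit 1 ;;
    *) echo "wrote $CNF_PATH with server-id=$server_id" ;;
esac
```

Running it with `CNF_PATH=/etc/mysql/conf.d/cloudstack.cnf` would write the real file, after which MySQL still needs to be restarted.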

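Restart MySQL

systemctl restart mysql

Create the "cloud" user in the database

cloudstack-setup-databases cloud:<dbpassword>@localhost [ --deploy-as=root:<password> | --schema-only ] -e <encryption_type> -m <management_server_key> -k <database_key> -i <management_server_ip>

- <dbpassword> is the password to assign to the cloud database user
- @localhost points to the local database by default; if MySQL is not deployed on the management node, use the IP of the server that hosts it
- <password> is the password of the database root user used for the deployment
- --schema-only conflicts with --deploy-as: the former is for manual configuration, the latter for automatic configuration
- -e <encryption_type> selects the database encryption type, either file or web; the default is file
- -m <management_server_key> replaces the default key used to encrypt confidential parameters in the CloudStack properties file; the default is password
- -k <database_key> replaces the default key used to encrypt confidential parameters in the CloudStack database; the default is password
- -i <management_server_ip> is the management node IP; the default is the local machine's IP

The configuration used here is

cloudstack-setup-databases cloud:'admin123456'@localhost --deploy-as=root:admin123456 -m admin123456 -k admin123456

The resulting configuration file is /etc/cloudstack/management/db.properties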
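To keep passwords and keys out of a hand-typed command line, the call above can be assembled from variables and reviewed before it is run. The sketch below only prints the command. All variable names and the `changeme` values are placeholders invented for this example, and values containing spaces or shell metacharacters would need extra quoting.

```shell
#!/bin/sh
# Sketch: build the database setup command from variables.
# All values are placeholders; the variable names are assumptions.
DB_HOST="${DB_HOST:-localhost}"
CLOUD_DB_PASS="${CLOUD_DB_PASS:-changeme}"
ROOT_DB_PASS="${ROOT_DB_PASS:-changeme}"
MGMT_KEY="${MGMT_KEY:-changeme}"
DB_KEY="${DB_KEY:-changeme}"

build_setup_cmd() {
    # Same option layout as the command used in this guide.
    printf 'cloudstack-setup-databases cloud:%s@%s --deploy-as=root:%s -m %s -k %s\n' \
        "$CLOUD_DB_PASS" "$DB_HOST" "$ROOT_DB_PASS" "$MGMT_KEY" "$DB_KEY"
}

# Print rather than execute, so the command can be reviewed first.
build_setup_cmd
```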

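If the KVM hypervisor runs on the same machine as the management server, edit /etc/sudoers and add the following line:

Defaults:cloud !requiretty

Finish configuring the management server's operating system. This command sets up iptables and sudoers and starts the management server.

cloudstack-setup-management

The output should include the message "CloudStack Management Server setup is done"

2. Preparing the NFS Share

Using the management server as the NFS server

Install NFS

apt-get install nfs-kernel-server

Check the disks

fdisk -l

Look for a device such as /dev/sdc

If it has no partition yet, create one first

parted /dev/sdc (sdc is the disk to be mounted)

mklabel gpt (create a GPT partition table)

mkpart primary 1 -1

p (print the result)

q (quit)

Checking the disks again now shows the partition /dev/sdc1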
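The interactive parted session above can also be expressed as a single scripted call. This is a sketch, not the author's exact procedure: it uses `1MiB` and `100%` as partition bounds in place of `1 -1`, and `DISK` and `DRY_RUN` are variables introduced here. Because partitioning destroys data, the default only prints the command.

```shell
#!/bin/sh
# Sketch: the interactive parted session as one scripted command.
# DISK and DRY_RUN are assumptions; the default only prints what would be run.
DISK="${DISK:-/dev/sdc}"
DRY_RUN="${DRY_RUN:-1}"

partition_cmd() {
    # --script suppresses prompts; 1MiB..100% yields one aligned partition.
    echo "parted --script $DISK mklabel gpt mkpart primary ext4 1MiB 100%"
}

if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $(partition_cmd)"
else
    $(partition_cmd)
    # Show the resulting partition table.
    parted --script "$DISK" print
fi
```

Double-check the device name with `fdisk -l` before setting `DRY_RUN=0`.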

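Format it as ext4

mkfs.ext4 /dev/sdc1

Mount the disk at /export (create the directory first with mkdir -p /export if it does not exist)

mount /dev/sdc1 /export

Run df -h to check that the mount succeeded

To mount it automatically at boot, edit /etc/fstab and add

/dev/sdc1 /export ext4 defaults 0 0

Create two directories for primary and secondary storage:

mkdir -p /export/primary

mkdir -p /export/secondary

Set the permissions of the shared folder to 777

chmod -R 777 /export

Edit /etc/exports and insert the following line

/export *(rw,async,no_root_squash,no_subtree_check)

Activate the export

exportfs -a

Enable NFS and rpcbind at boot, reboot the host, and confirm that both are running

systemctl enable --now rpcbind

systemctl enable --now nfs-kernel-server

reboot

systemctl status rpcbind

systemctl status nfs-kernel-server

Using a separate NFS server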

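In this case the secondary storage has to be mounted on the management server, and the KVM hypervisor installed

On the NFS server, create the secondary storage and, optionally, the primary storage

mkdir -p /export/primary

mkdir -p /export/secondary

Edit /etc/exports and insert the following line

/export *(rw,async,no_root_squash,no_subtree_check)

Activate the export

exportfs -a

On the management server, create a mount point for the secondary storage

mkdir -p /export/secondary

Mount the secondary storage on the management server

mount -t nfs <nfs-server-name-or-IP>:/export/secondary /export/secondary

Download and install the KVM template

Downloads are again slow from inside China, so the template can be downloaded locally and uploaded, with -u changed to -f. Run the following on the management server

/usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt -m /export/secondary -u http://download.cloudstack.org/systemvm/4.18/systemvmtemplate-4.18.1-kvm.qcow2.bz2 -h kvm -s <optional-management-server-secret-key> -F

Download URL: http://download.cloudstack.org/systemvm/4.18/systemvmtemplate-4.18.1-kvm.qcow2.bz2

Upload it to the /root directory

The command then becomes:

/usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt -m /export/secondary -f /root/systemvmtemplate-4.18.1-kvm.qcow2.bz2 -h kvm -F

-s <optional-management-server-secret-key> is required when the database encryption type is web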
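The choice between -u and -f described above can be made automatically, depending on whether the template has already been uploaded. A minimal sketch, assuming the paths used in this guide: `LOCAL_FILE`, `MOUNT_POINT`, and the function name are introduced here for illustration, and the command is printed rather than executed.

```shell
#!/bin/sh
# Sketch: use the uploaded template when present, otherwise the download URL.
# Variable and function names are assumptions; paths follow this guide.
SCRIPT=/usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt
URL=http://download.cloudstack.org/systemvm/4.18/systemvmtemplate-4.18.1-kvm.qcow2.bz2
LOCAL_FILE="${LOCAL_FILE:-/root/systemvmtemplate-4.18.1-kvm.qcow2.bz2}"
MOUNT_POINT="${MOUNT_POINT:-/export/secondary}"

template_cmd() {
    if [ -f "$LOCAL_FILE" ]; then
        # Local copy found: install from file.
        echo "$SCRIPT -m $MOUNT_POINT -f $LOCAL_FILE -h kvm -F"
    else
        # No local copy: let the script download the template.
        echo "$SCRIPT -m $MOUNT_POINT -u $URL -h kvm -F"
    fi
}

# Print the command so it can be checked before running it.
template_cmd
```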

If you are using a separate NFS server, unmount the secondary storage and remove the directory

umount /export/secondary

rmdir /export/secondary

Restart cloudstack-management

systemctl restart cloudstack-management

Opening http://IP:8080/client/# returns a 503 error: MESSAGE: Service Unavailable

Check the log: /var/log/cloudstack/management/management-server.log

It contains the error: java.sql.SQLNonTransientConnectionException: Could not create connection to database server

According to a GitHub issue, the cause is the line cluster.node.IP=127.0.1.1 in /etc/cloudstack/management/db.properties

Change it to cluster.node.IP=127.0.0.1
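The db.properties change above can be applied with sed while keeping a backup. This is a sketch that runs against a throwaway file: the function name and the sample line `some.other.key=unchanged` are made up for the demonstration.

```shell
#!/bin/sh
# Sketch: replace cluster.node.IP=127.0.1.1 with 127.0.0.1 in a properties file.
# The function name and the demo content are illustrative assumptions.
fix_cluster_node_ip() {
    props="$1"
    # Keep a backup in case the change needs to be reverted.
    cp "$props" "$props.bak"
    # Only touch the line when it still holds the 127.0.1.1 value.
    sed -i 's/^cluster\.node\.IP=127\.0\.1\.1[[:space:]]*$/cluster.node.IP=127.0.0.1/' "$props"
}

# Demo on a temporary file; the real file is
# /etc/cloudstack/management/db.properties.
demo_props="$(mktemp)"
printf '%s\n' 'some.other.key=unchanged' 'cluster.node.IP=127.0.1.1' > "$demo_props"
fix_cluster_node_ip "$demo_props"
grep '^cluster.node.IP=' "$demo_props"
```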

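Restart cloudstack-management

systemctl restart cloudstack-management

Open the URL again. The default username is admin and the default password is password

3. Compute Node Installation

Install the agent

apt-get install cloudstack-agent

As before, downloads are very slow from inside China. You can manually download http://download.cloudstack.org/ubuntu/dists/jammy/4.18/pool/cloudstack-common_4.18.1.0_all.deb and cloudstack-agent_4.18.1.0_all.deb, upload them to /var/cache/apt/archives/partial, and then run the install command, which skips the download and only verifies the files

Install and configure libvirt

Edit /etc/libvirt/libvirtd.conf

Uncomment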

listen_tls = 0

listen_tcp = 0

tls_port = "16514"

tcp_port = "16509"

auth_tcp = "none"

mdns_adv = 0

Edit /etc/default/libvirtd

LIBVIRTD_ARGS="--listen"

Edit /etc/libvirt/qemu.conf

Uncomment

vnc_listen = 0.0.0.0

Restart libvirt

systemctl restart libvirtd

This fails with an error

root@server01:~# systemctl restart libvirtd

Job for libvirtd.service failed because the control process exited with error code.

See "systemctl status libvirtd.service" and "journalctl -xeu libvirtd.service" for details.

root@server01:~# systemctl status libvirtd

× libvirtd.service - Virtualization daemon

Loaded: loaded (/lib/systemd/system/libvirtd.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Tue 2023-06-27 15:36:31 UTC; 7s ago

TriggeredBy: × libvirtd.socket

× libvirtd-admin.socket

× libvirtd-ro.socket

Docs: man:libvirtd(8)

https://libvirt.org

Process: 22286 ExecStart=/usr/sbin/libvirtd $LIBVIRTD_ARGS (code=exited, status=6)

Main PID: 22286 (code=exited, status=6)

Tasks: 2 (limit: 32768)

Memory: 5.2M

CPU: 33ms

CGroup: /system.slice/libvirtd.service

├─18337 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvirt_leaseshelper

└─18338 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvirt_leaseshelper

Jun 27 15:36:31 server01 systemd[1]: libvirtd.service: Scheduled restart job, restart counter is at 5.

Jun 27 15:36:31 server01 systemd[1]: Stopped Virtualization daemon.

Jun 27 15:36:31 server01 systemd[1]: libvirtd.service: Start request repeated too quickly.

Jun 27 15:36:31 server01 systemd[1]: libvirtd.service: Failed with result 'exit-code'.

Jun 27 15:36:31 server01 systemd[1]: libvirtd.service: Unit process 18337 (dnsmasq) remains running after unit stopped.

Jun 27 15:36:31 server01 systemd[1]: libvirtd.service: Unit process 18338 (dnsmasq) remains running after unit stopped.

Jun 27 15:36:31 server01 systemd[1]: Failed to start Virtualization daemon.

After running the following command, the restart succeeds (the --listen option cannot be combined with systemd socket activation, so the socket units have to be masked)

systemctl mask libvirtd.socket libvirtd-ro.socket libvirtd-admin.socket libvirtd-tls.socket libvirtd-tcp.socket

Configure the security policy

Check whether AppArmor is installed

dpkg --list 'apparmor'

Disable the AppArmor profiles for libvirt

ln -s /etc/apparmor.d/usr.sbin.libvirtd /etc/apparmor.d/disable/

ln -s /etc/apparmor.d/usr.lib.libvirt.virt-aa-helper /etc/apparmor.d/disable/

apparmor_parser -R /etc/apparmor.d/usr.sbin.libvirtd

apparmor_parser -R /etc/apparmor.d/usr.lib.libvirt.virt-aa-helper

Configure bridged networking

Basic networking, single NIC

Edit the yaml file under /etc/netplan

For example, /etc/netplan/00-installer-config.yaml, which looks like this

# This is the network config written by 'subiquity'
network:
  ethernets:
    enp130s0f0:
      dhcp4: true
    enp130s0f1:
      dhcp4: true
    enp132s0f0:
      dhcp4: true
    enp132s0f1:
      dhcp4: true
    enp134s0f0:
      dhcp4: true
    enp134s0f1:
      dhcp4: true
    enp7s0f0:
      addresses:
      - 172.16.198.12/24
      nameservers:
        addresses:
        - 211.99.129.210
        - 211.99.129.211
        search: []
      routes:
      - to: default
        via: 172.16.198.1
    enp7s0f1:
      dhcp4: true
  version: 2

The interface has to be changed to a bridge. Each nesting level must be indented by two spaces relative to its parent, as follows:

# This is the network config written by 'subiquity'
network:
  ethernets:
    enp130s0f0:
      dhcp4: true
    enp130s0f1:
      dhcp4: true
    enp132s0f0:
      dhcp4: true
    enp132s0f1:
      dhcp4: true
    enp134s0f0:
      dhcp4: true
    enp134s0f1:
      dhcp4: true
    enp7s0f0:
      dhcp4: no
    enp7s0f1:
      dhcp4: true
  bridges:
    cloudbr0:
      dhcp4: no
      interfaces: [enp7s0f0]
      addresses:
      - 172.16.198.12/24
      nameservers:
        addresses:
        - 211.99.129.210
        - 211.99.129.211
        search: []
      routes:
      - to: default
        via: 172.16.198.1
    cloudbr1:
      interfaces: [vlan200]
  vlans:
    vlan200:
      accept-ra: no
      id: 200
      link: enp7s0f0
  version: 2

Advanced networking, multiple NICs

# This is the network config written by 'subiquity'
network:
  ethernets:
    enp130s0f0:
      dhcp4: true
    enp130s0f1:
      dhcp4: true
    enp132s0f0:
      dhcp4: true
    enp132s0f1:
      dhcp4: true
    enp134s0f0:
      dhcp4: true
    enp134s0f1:
      dhcp4: true
    enp7s0f0:
      dhcp4: no
    enp7s0f1:
      dhcp4: no
  bridges:
    cloudbr0:
      dhcp4: no
      interfaces: [enp7s0f0]
      addresses:
      - 172.16.198.12/24
      nameservers:
        addresses:
        - 211.99.129.210
        - 211.99.129.211
        search: []
      routes:
      - to: default
        via: 172.16.198.1
    cloudbr1:
      dhcp4: no
      interfaces: [enp7s0f1]
  version: 2

The second bridge does not need an IP address; it can be connected to a trunk port to carry multiple networks
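Since netplan files are sensitive to indentation and spacing, a quick pre-check before applying them can catch two common slips: tab characters, and a key written without a space after the colon (for example `id:200`, which YAML reads as a plain string instead of a key and value). The sketch below is a rough heuristic, not a YAML parser, and the function name and demo file are assumptions.

```shell
#!/bin/sh
# Sketch: rough sanity checks for a netplan YAML file.
# This is a heuristic, not a YAML parser; names are illustrative assumptions.
check_netplan_yaml() {
    file="$1"
    tab="$(printf '\t')"
    # YAML does not allow tab characters for indentation.
    if grep -q "$tab" "$file"; then
        echo "tab character found; YAML indentation must use spaces"
        return 1
    fi
    # "key:value" without a space is not parsed as a mapping entry.
    if grep -n -E '^[[:space:]]*[A-Za-z0-9_-]+:[^[:space:]]' "$file"; then
        echo "missing space after a colon on the line(s) above"
        return 1
    fi
    echo "basic checks passed"
}

# Demo on a small temporary file modelled on the bridge configuration.
demo_yaml="$(mktemp)"
cat > "$demo_yaml" <<'EOF'
network:
  ethernets:
    enp7s0f0:
      dhcp4: no
  bridges:
    cloudbr0:
      dhcp4: no
      interfaces: [enp7s0f0]
  version: 2
EOF
check_netplan_yaml "$demo_yaml"
```

Once the check passes on the real file, `netplan generate` followed by `netplan apply` would be the usual way to validate and activate the configuration.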

Start the agent and enable it at boot

systemctl start cloudstack-agent

systemctl enable cloudstack-agent

At this point cloudstack-agent is shown as not yet running

root@server02:~# systemctl status cloudstack-agent.service

● cloudstack-agent.service - CloudStack Agent

Loaded: loaded (/lib/systemd/system/cloudstack-agent.service; enabled; vendor preset: enabled)

Active: activating (auto-restart) since Thu 2023-06-29 09:03:35 UTC; 2s ago

Docs: http://www.cloudstack.org/

Process: 27717 ExecStart=/usr/bin/java $JAVA_OPTS $JAVA_DEBUG -cp $CLASSPATH $JAVA_CLASS (code=exited, status=0/SUCCESS)

Main PID: 27717 (code=exited, status=0/SUCCESS)

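CPU: 2.606s

The state is activating or loaded for now; it changes to running once the host is configured through the web UI

Problems Encountered

Log files

All configuration files are under /etc/cloudstack/

Management node setup log: /var/log/cloudstack/management/setupManagement.log

Management node runtime log: /var/log/cloudstack/management/management-server.log

Management node web access log: /var/log/cloudstack/management/access.log

Compute node setup log: /var/log/cloudstack/agent/setup.log

Compute node runtime log: /var/log/cloudstack/agent/agent.log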
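When troubleshooting, it helps to pull only the most recent error lines out of these logs. A small sketch follows; the function name `recent_errors` and the sample log content are assumptions, and the search pattern is only a starting point.

```shell
#!/bin/sh
# Sketch: show the last few error or exception lines of a log file.
# The function name and the demo log are illustrative assumptions.
recent_errors() {
    log="$1"
    count="${2:-20}"
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 1
    fi
    # -n keeps line numbers so matches can be located in the full log.
    grep -n -i -E 'error|exception' "$log" | tail -n "$count"
}

# Demo on a temporary file; on a real system pass for example
# /var/log/cloudstack/management/management-server.log.
demo_log="$(mktemp)"
printf '%s\n' \
    'INFO  starting' \
    'ERROR java.sql.SQLNonTransientConnectionException: Could not create connection to database server' \
    'INFO  done' > "$demo_log"
recent_errors "$demo_log" 5
```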

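- One problem so far: after an address change, the wrong management IP was pushed to the compute host, which kept contacting the wrong IP, so communication failed and the host could not be added. After correcting the management IP as described above, re-registering the host manually completes the addition.

Run cloudstack-setup-agent and fill in the values for your environment. Note that the IDs are all numeric and are not shown in the web UI, so you may need to look them up in the database. In practice everything except the management IP was correct, so only the IP needs changing; press Enter to accept the defaults for the rest.

Alternatively, edit the cloudstack-agent configuration file /etc/cloudstack/agent/agent.properties directly and then restart the cloudstack-agent service.

- Uploading an ISO in the web UI reported an error, and secondary storage showed as 0. A web search suggested a problem with the secondary storage system VM, and opening the VM console hung. After restarting the two system VMs, secondary storage recovered.

- Adding a compute node may fail, with the agent log reporting that br0 is not recognized as a network device; after restarting the network interface the node can be added normally.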
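For the first problem in the list above, editing agent.properties directly might look like the sketch below. It assumes that the management server address is stored under the `host=` key. The function name, the demo file, and the IP addresses are made up for illustration, so verify the key name in your own file before relying on it.

```shell
#!/bin/sh
# Sketch: point the agent at a different management server IP.
# Assumes the address is stored under the host= key; names are illustrative.
set_agent_host() {
    props="$1"
    ip="$2"
    # Keep a backup before changing anything.
    cp "$props" "$props.bak"
    if grep -q '^host=' "$props"; then
        sed -i "s/^host=.*/host=$ip/" "$props"
    else
        echo "host=$ip" >> "$props"
    fi
}

# Demo on a temporary file; the real file is
# /etc/cloudstack/agent/agent.properties.
demo_agent="$(mktemp)"
printf '%s\n' 'host=10.0.0.99' 'port=8250' > "$demo_agent"
set_agent_host "$demo_agent" 172.16.198.11
grep '^host=' "$demo_agent"
```

After changing the real file, restart the agent with `systemctl restart cloudstack-agent`.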

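2 comments

After configuring the bridged network, the network connection was lost

That is a nested virtualization issue; the NIC needs promiscuous mode enabled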